a) BINOMIAL DISTRIBUTION

Suppose an experiment has two distinct outcomes ('heads or tails') with probabilities P(heads) = p and P(tails) = 1 − p, and we repeat the experiment N times independently.

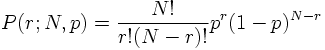

The probability of obtaining 'heads' exactly r times is then given by:

P(r; N, p) = N! / (r! (N − r)!) · p^r (1 − p)^(N − r)
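As a numerical illustration, the binomial probability can be evaluated directly with the binomial coefficient. The following is a minimal sketch; the function name `binomial_pmf` and the example values (a fair coin tossed 10 times) are assumptions chosen for illustration and do not come from the notes.

```python
from math import comb


def binomial_pmf(r, n, p):
    """Probability of exactly r 'heads' in n independent trials with P(heads) = p."""
    return comb(n, r) * p**r * (1 - p) ** (n - r)


# Assumed illustrative values: a fair coin (p = 0.5) tossed N = 10 times.
n, p = 10, 0.5
probs = [binomial_pmf(r, n, p) for r in range(n + 1)]

total = sum(probs)                               # normalisation, should be 1
mean = sum(r * q for r, q in enumerate(probs))   # expectation value, should be N * p

print(f"P(r=5) = {probs[5]:.4f}, sum = {total:.4f}, mean = {mean:.4f}")
```

Summing the probabilities over r = 0, ..., N checks the normalisation, and the expectation value reproduces Np.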

b) POISSON DISTRIBUTION

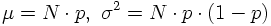

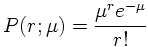

If, in the binomial distribution, the probability p of a single event becomes small and the number of trials N becomes large such that μ = Np remains finite, the binomial distribution approaches a Poisson distribution, which is described by the single parameter μ:

P(r; μ) = μ^r e^(−μ) / r!

Example: Radioactive Cs-137 nuclei have a half-life of about 30 years. The decay probability per unit time for a single nucleus is then λ = ln 2 / (30 years) ≈ 7.3 × 10⁻¹⁰ s⁻¹. A sample of order 1 μg of Cs-137 contains of order 10¹⁵ nuclei (= trials). Taking N = 10¹⁵, we expect μ = Nλ ≈ 7.3 × 10⁵ decays/s, and the number of decays observed in one second is distributed according to a Poisson distribution with parameter μ. Similar arguments apply to particle scattering.
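The approach of the binomial distribution to the Poisson distribution can be checked numerically. The sketch below keeps μ = Np fixed and increases N. The helper names and the choice μ = 3 are assumptions made for illustration only.

```python
from math import comb, exp, factorial


def binomial_pmf(r, n, p):
    """Binomial probability of r successes in n trials."""
    return comb(n, r) * p**r * (1 - p) ** (n - r)


def poisson_pmf(r, mu):
    """Poisson probability of r events for mean mu."""
    return mu**r * exp(-mu) / factorial(r)


def max_abs_diff(n, mu, r_max=10):
    """Largest difference between the two distributions for r = 0..r_max, with p = mu / n."""
    p = mu / n
    return max(abs(binomial_pmf(r, n, p) - poisson_pmf(r, mu)) for r in range(r_max + 1))


mu = 3.0  # assumed illustrative mean
for n in (10, 100, 10_000):
    print(f"N = {n:6d}, p = {mu / n:.4f}, max |binomial - Poisson| = {max_abs_diff(n, mu):.2e}")
```

The printed difference shrinks as N grows and p = μ/N becomes small, which is the limit described in the text.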

c) GAUSSIAN DISTRIBUTION AND CENTRAL LIMIT THEOREM

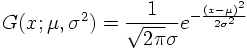

The Gaussian distribution for a continuous random variable x is characterized by two parameters, μ and σ², which represent the mean value and the variance of the distribution:

G(x; μ, σ²) = 1/√(2πσ²) · exp(−(x − μ)² / (2σ²))

The Gaussian distribution plays a central role in statistical data analysis due to the

'CENTRAL LIMIT THEOREM':

If x_i (i = 1, ..., n) are independent random variables with p.d.f.'s f_i(x_i), mean values μ_i and finite variances σ_i², then for large n the sum s = Σ_i x_i is a random variable whose p.d.f. approaches the Gaussian G(s; s0, σ²) with mean s0 = Σ_i μ_i and variance σ² = Σ_i σ_i².

Consequences:

a) In the limit of large μ the Poisson distribution becomes a Gaussian distribution.

b) If a measurement is influenced by a sum of many independent random errors of similar size, the result of the measurement is distributed according to a Gaussian distribution.
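The central limit theorem can be illustrated with a small simulation. The sketch below sums 12 uniform random numbers, each with mean 1/2 and variance 1/12, so the sum should have mean 6 and variance 1 and be close to Gaussian. The number of terms, the sample size and the seed are arbitrary assumptions.

```python
import random
from statistics import fmean, pvariance

random.seed(1)  # fixed seed so the sketch is reproducible

n_terms = 12       # number of summed variables (assumed)
n_samples = 20000  # number of simulated sums (assumed)

# Each term is uniform on [0, 1): mean 1/2, variance 1/12.
sums = [sum(random.random() for _ in range(n_terms)) for _ in range(n_samples)]

mean_s = fmean(sums)     # CLT prediction: n_terms * 1/2 = 6
var_s = pvariance(sums)  # CLT prediction: n_terms * 1/12 = 1

# For a Gaussian, about 68% of the values lie within one standard deviation of the mean.
within_1sigma = sum(abs(s - 6.0) < 1.0 for s in sums) / n_samples

print(f"mean = {mean_s:.3f}, variance = {var_s:.3f}, fraction within 1 sigma = {within_1sigma:.3f}")
```

Although each individual term is far from Gaussian, already a dozen terms give a sum whose one-sigma fraction is close to the Gaussian value.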

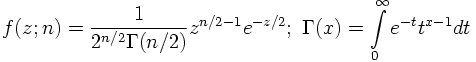

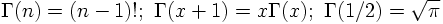

d) χ² DISTRIBUTION

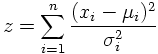

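The χ² distribution derives its importance from the fact that a sum of squared deviations of n independent Gaussian distributed random variables x_i from their means μ_i, each divided by the corresponding variance σ_i², i.e. χ² = Σ_i (x_i − μ_i)² / σ_i², follows a χ² distribution with n degrees of freedom.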

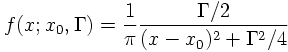
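A short simulation can illustrate how the χ² variable arises from Gaussian random variables. The sketch below draws n = 4 Gaussian variables with different means and widths, forms the sum of squared deviations divided by the variances, and compares the sample mean and variance with the standard χ² results (mean n, variance 2n), which are textbook properties not quoted in these notes. All numerical values are assumptions for illustration.

```python
import random
from statistics import fmean, pvariance

random.seed(2)  # fixed seed so the sketch is reproducible

# Assumed means and standard deviations of n = 4 independent Gaussian variables.
mus = [0.0, 1.0, -2.0, 5.0]
sigmas = [1.0, 0.5, 2.0, 3.0]
n_dof = len(mus)


def chi2_sample():
    """One value of sum_i (x_i - mu_i)^2 / sigma_i^2 for freshly drawn Gaussian x_i."""
    return sum(((random.gauss(m, s) - m) / s) ** 2 for m, s in zip(mus, sigmas))


samples = [chi2_sample() for _ in range(20000)]

mean_chi2 = fmean(samples)    # expected: n_dof
var_chi2 = pvariance(samples) # expected: 2 * n_dof

print(f"n = {n_dof}: mean = {mean_chi2:.2f}, variance = {var_chi2:.2f}")
```

The result does not depend on the individual means and widths, because each term is normalised by its own variance.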

e) BREIT-WIGNER DISTRIBUTION

If a particle is unstable, i.e. its lifetime is finite, its energy (mass) x does not have a single well-defined value but is spread according to a Breit-Wigner distribution:

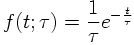

f) EXPONENTIAL DISTRIBUTION

The proper decay times t for unstable particles with lifetime τ are distributed according to the p.d.f.:

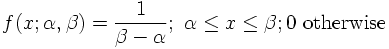

g) UNIFORM DISTRIBUTION

A very important p.d.f. for practical purposes is the uniform p.d.f.:

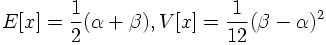

For a uniform distribution on the interval [a, b], the mean value and variance are ⟨x⟩ = (a + b)/2 and σ² = (b − a)²/12.

Starting from a continuous random variable x with p.d.f. f(x), we define a new random variable y = F(x), where F is the cumulative distribution function of x. Independent of the form of f(x), the new variable y is uniformly distributed between 0 and 1!
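This property can be checked numerically. The sketch below uses the exponential p.d.f. with an assumed lifetime τ = 2, whose cumulative distribution is F(t) = 1 − exp(−t/τ), and verifies that y = F(t) has the mean 1/2 and variance 1/12 of a uniform variable on [0, 1]. The second half runs the argument in reverse (commonly called inverse transform sampling, a standard technique not spelled out in the notes) to generate exponential decay times from uniform random numbers. All names and values are illustrative assumptions.

```python
import math
import random
from statistics import fmean, pvariance

random.seed(3)  # fixed seed so the sketch is reproducible

tau = 2.0  # assumed mean lifetime, arbitrary units


def F(t):
    """Cumulative distribution of the exponential p.d.f. (1/tau) * exp(-t/tau)."""
    return 1.0 - math.exp(-t / tau)


def F_inv(y):
    """Inverse of F, valid for 0 <= y < 1."""
    return -tau * math.log(1.0 - y)


# Forward direction: exponentially distributed t, transformed to y = F(t).
ts = [random.expovariate(1.0 / tau) for _ in range(20000)]
ys = [F(t) for t in ts]
mean_y = fmean(ys)     # uniform on [0, 1]: expect 1/2
var_y = pvariance(ys)  # uniform on [0, 1]: expect 1/12

# Reverse direction: uniform y, transformed to t = F_inv(y), gives exponential decay times.
ts_generated = [F_inv(random.random()) for _ in range(20000)]
mean_t = fmean(ts_generated)  # expect tau

print(f"mean(y) = {mean_y:.3f}, var(y) = {var_y:.4f}, mean(t) = {mean_t:.3f}")
```

The reverse direction is what makes the uniform distribution so useful in practice: a uniform random number generator is enough to sample any p.d.f. whose cumulative distribution can be inverted.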